Bring Your "Own" Gitlab CI Runner

Gitlab boasts this nifty feature of using your own Gitlab CI Runner. But what if you’re sans a “really personal” CI Runner? Fear not, we’re about to roll up our sleeves and craft one ourselves. []~( ̄▽ ̄)~*

In this dive, we’re going to:

- Outline the core duties of a Gitlab Runner;

- Dissect the interactions between a Runner and Gitlab during operation;

- Design and implement our very own Runner;

- Bootstrap: get our Runner to run its own CI jobs.

Of course, if you’re the type to jump straight to the code, feel free to check out the Github repo. If you dig it, a star would be much appreciated.

Core Duties

Here are the essentials a Gitlab Runner must handle:

- Fetch jobs from Gitlab;

- Upon fetching, prepare a pristine, isolated, and reproducible environment;

- Execute the job within this environment, uploading logs as it goes;

- Report back the outcome (success/failure) after job completion or in case of an unexpected exit.

Our DIY Runner is expected to fulfill these tasks as well.

Peeling the Layers

Let’s sequentially unravel these core tasks and peek at how the Runner interacts with Gitlab.

For brevity, API request and response content has been condensed.

Registration

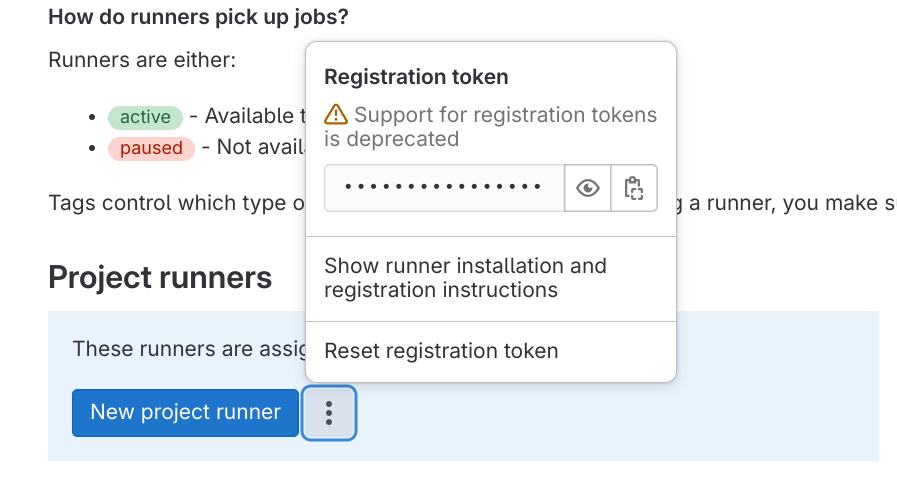

If you’ve ever set up a self-hosted Gitlab Runner, you might be familiar with this page:

Users snag a registration token from this interface, then employ the gitlab-runner register command to enlist their Runner instance with Gitlab.

This registration step essentially hits the POST /api/v4/runners endpoint, with a body like:


If the registration token is invalid, Gitlab responds with 403 Forbidden. Upon successful registration, you get:


The Runner cares mostly about the token, which represents its identity and is used for authentication in subsequent API calls. This token, along with other settings, gets stored in ~/.gitlab-runner/config.toml.
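The registration exchange can be sketched in Go roughly as follows. This is a minimal sketch, not the official client: only the `token` and `description` request fields and the `id` and `token` response fields are modelled, the 201 Created success status is an assumption based on how the endpoint behaves in practice, and `fakeGitlab` is a hypothetical stand-in server so the example runs without a real Gitlab instance.

```go
package main

import (
	"bytes"
	"encoding/json"
	"errors"
	"fmt"
	"net/http"
	"net/http/httptest"
)

// registerRequest carries only the fields this sketch needs.
type registerRequest struct {
	Token       string `json:"token"`       // registration token from the settings page
	Description string `json:"description"` // free-form runner name
}

// registerResponse keeps only what the Runner cares about.
type registerResponse struct {
	ID    int    `json:"id"`
	Token string `json:"token"` // runner token used for all later API calls
}

var errForbidden = errors.New("registration token rejected (403 Forbidden)")

// register posts the registration token and returns the runner token.
func register(baseURL, regToken, description string) (string, error) {
	body, err := json.Marshal(registerRequest{Token: regToken, Description: description})
	if err != nil {
		return "", err
	}
	resp, err := http.Post(baseURL+"/api/v4/runners", "application/json", bytes.NewReader(body))
	if err != nil {
		return "", err
	}
	defer resp.Body.Close()

	switch resp.StatusCode {
	case http.StatusCreated: // assumed success status
		var out registerResponse
		if err := json.NewDecoder(resp.Body).Decode(&out); err != nil {
			return "", err
		}
		return out.Token, nil
	case http.StatusForbidden:
		return "", errForbidden
	default:
		return "", fmt.Errorf("unexpected status %s", resp.Status)
	}
}

// fakeGitlab is a hypothetical test server that accepts exactly one
// registration token and hands out a fixed runner token.
func fakeGitlab(validToken string) *httptest.Server {
	return httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		var req registerRequest
		if err := json.NewDecoder(r.Body).Decode(&req); err != nil || req.Token != validToken {
			w.WriteHeader(http.StatusForbidden)
			return
		}
		w.WriteHeader(http.StatusCreated)
		json.NewEncoder(w).Encode(registerResponse{ID: 1, Token: "runner-token-example"})
	}))
}

func main() {
	srv := fakeGitlab("reg-token-example")
	defer srv.Close()

	token, err := register(srv.URL, "reg-token-example", "tart")
	fmt.Println(token, err)
}
```

A real Runner would then persist the returned token in its config file instead of printing it.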

Fetching Jobs

Runners are configured with a maximum number of concurrent jobs. When running below this limit, they poll POST /api/v4/jobs/request to fetch work, with a body somewhat similar to the registration call:


If there’s no job available, Gitlab responds with a 204 No Content status, including a cursor in the header for the next request. The cursor is a random string, utilized by Gitlab’s frontend proxy (Workhorse) to decide whether to make the Runner wait (for long polling) or to pass the request directly to the backend. The cursor in Redis is updated by the backend, which notifies Workhorse through Redis Pub/Sub. Job selection is implemented as a complex SQL query on the backend.

Upon receiving a new job, Gitlab returns 201 Created with a body like:

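To make the polling contract concrete, here is a hedged Go sketch of a single poll. The header name `X-GitLab-Last-Update` and the `last_update` body field are my best understanding of how the cursor travels and should be treated as assumptions; the `job` struct models only a tiny subset of the payload, and `fakeGitlab` is a hypothetical server that answers 204 first and 201 once the cursor is echoed back.

```go
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
	"net/http"
	"net/http/httptest"
)

// cursorHeader is the assumed name of the header carrying the cursor.
const cursorHeader = "X-GitLab-Last-Update"

// jobRequest is the polling body; LastUpdate echoes the previous cursor.
type jobRequest struct {
	Token      string `json:"token"`
	LastUpdate string `json:"last_update,omitempty"`
}

// job models a small subset of the job payload.
type job struct {
	ID    int    `json:"id"`
	Token string `json:"token"` // per-job token used for trace uploads
}

// requestJob polls once. It returns a nil job when nothing is available,
// together with the cursor to send on the next poll.
func requestJob(baseURL, runnerToken, cursor string) (*job, string, error) {
	body, err := json.Marshal(jobRequest{Token: runnerToken, LastUpdate: cursor})
	if err != nil {
		return nil, cursor, err
	}
	resp, err := http.Post(baseURL+"/api/v4/jobs/request", "application/json", bytes.NewReader(body))
	if err != nil {
		return nil, cursor, err
	}
	defer resp.Body.Close()

	next := resp.Header.Get(cursorHeader)
	if next == "" {
		next = cursor // keep the old cursor if the server sent none
	}
	switch resp.StatusCode {
	case http.StatusNoContent:
		return nil, next, nil
	case http.StatusCreated:
		var j job
		if err := json.NewDecoder(resp.Body).Decode(&j); err != nil {
			return nil, next, err
		}
		return &j, next, nil
	default:
		return nil, next, fmt.Errorf("unexpected status %s", resp.Status)
	}
}

// fakeGitlab is a hypothetical server: no job until the cursor "c1" comes back.
func fakeGitlab() *httptest.Server {
	return httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		var req jobRequest
		json.NewDecoder(r.Body).Decode(&req)
		if req.LastUpdate != "c1" {
			w.Header().Set(cursorHeader, "c1")
			w.WriteHeader(http.StatusNoContent)
			return
		}
		w.WriteHeader(http.StatusCreated)
		json.NewEncoder(w).Encode(job{ID: 42, Token: "job-token-example"})
	}))
}

func main() {
	srv := fakeGitlab()
	defer srv.Close()

	j, cursor, err := requestJob(srv.URL, "runner-token-example", "")
	fmt.Println(j == nil, cursor, err)
	j, _, err = requestJob(srv.URL, "runner-token-example", cursor)
	fmt.Println(j.ID, err)
}
```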

Environment Prep and Repo Cloning

To ensure CI execution is stable and reproducible, Runner execution environments need a certain level of isolation. This is where Executors come into play, offering a variety of environments:

- Shell Executor: Easy for debugging and straightforward, but offers low isolation.

- Docker or Kubernetes Executor: Provides isolation except for the OS kernel. Highly reproducible jobs due to rich image ecosystem.

- VirtualBox or Docker Machine Executor: OS-level isolation but can be resource-intensive.

Executors provide necessary APIs for Gitlab Runner calls:

- Prepare the environment;

- Execute provided scripts and return outputs and results;

- Clean up the environment.
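Those three duties map naturally onto a small Go interface. The sketch below is illustrative only: the `Executor` interface, `shellExecutor`, and `runJob` are hypothetical names rather than Gitlab Runner's actual types, and the shell implementation assumes a POSIX `sh` on the host and offers no isolation beyond a scratch directory.

```go
package main

import (
	"bytes"
	"errors"
	"fmt"
	"io"
	"os"
	"os/exec"
)

// Executor is a hypothetical interface mirroring the three duties above.
type Executor interface {
	Prepare() error
	Run(script string, output io.Writer) (exitCode int, err error)
	Cleanup() error
}

// shellExecutor runs scripts with the local sh inside a scratch directory.
type shellExecutor struct{ workDir string }

func (e *shellExecutor) Prepare() error {
	dir, err := os.MkdirTemp("", "job-")
	if err != nil {
		return err
	}
	e.workDir = dir
	return nil
}

func (e *shellExecutor) Run(script string, output io.Writer) (int, error) {
	cmd := exec.Command("sh", "-c", script)
	cmd.Dir = e.workDir
	cmd.Stdout = output
	cmd.Stderr = output

	err := cmd.Run()
	var exitErr *exec.ExitError
	if errors.As(err, &exitErr) {
		// The script ran but failed: report its exit code, not an error.
		return exitErr.ExitCode(), nil
	}
	if err != nil {
		return -1, err // the script could not be started at all
	}
	return 0, nil
}

func (e *shellExecutor) Cleanup() error { return os.RemoveAll(e.workDir) }

// runJob drives any Executor through prepare, run, and cleanup.
func runJob(e Executor, script string) (string, int, error) {
	if err := e.Prepare(); err != nil {
		return "", -1, err
	}
	defer e.Cleanup()

	var out bytes.Buffer
	code, err := e.Run(script, &out)
	return out.String(), code, err
}

func main() {
	out, code, err := runJob(&shellExecutor{}, "echo hello; exit 7")
	fmt.Printf("output=%q exit=%d err=%v\n", out, code, err)
}
```

Keeping the exit code separate from the Go error lets the caller distinguish "the job failed" from "the environment broke".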

Cloning a repository is essentially executing a git clone within the environment:

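One way to generate those clone commands from the job payload is sketched below. The function names, the example URL with embedded credentials, and the choice of `git checkout` to pin the commit are all assumptions for illustration; the official Runner may use a different sequence of git commands.

```go
package main

import (
	"fmt"
	"strings"
)

// shellQuote wraps s in single quotes so the shell treats it as one literal word.
func shellQuote(s string) string {
	return "'" + strings.ReplaceAll(s, "'", `'\''`) + "'"
}

// cloneScript returns shell lines that clone repoURL into dir, enter it,
// and check out the exact commit the job was created for.
func cloneScript(repoURL, sha, dir string) []string {
	return []string{
		"git clone " + shellQuote(repoURL) + " " + shellQuote(dir),
		"cd " + shellQuote(dir),
		"git checkout --quiet " + shellQuote(sha),
	}
}

func main() {
	// Placeholder URL and commit; a real job payload supplies both.
	lines := cloneScript("https://user:secret@gitlab.example.com/group/tart.git", "f98d0f26", "tart")
	fmt.Println(strings.Join(lines, "\n"))
}
```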

Executing Jobs and Uploading Logs

Runner organizes all executable work into scripts and passes them to the Executor for execution. Environment variables are declared at the start of each script, for example with export in bash environments.

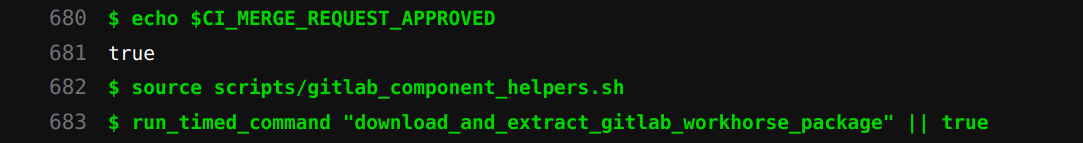

An interesting note: the green-highlighted command lines seen in CI logs are generated by Runner using echo commands with terminal color codes:

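A small Go helper can generate such a line. This sketch assumes bold green is the escape sequence `\x1b[32;1m` and reuses the `\x1b[0;m` reset seen in the log sample further down; it emits `printf` instead of `echo` as a deliberate substitution, because `echo` treats backslashes differently across shells. The function names are hypothetical.

```go
package main

import (
	"fmt"
	"strings"
)

const (
	ansiBoldGreen = "\x1b[32;1m" // assumed colour code for the command echo
	ansiReset     = "\x1b[0;m"
)

// shellQuote wraps s in single quotes so the shell treats it as one literal word.
func shellQuote(s string) string {
	return "'" + strings.ReplaceAll(s, "'", `'\''`) + "'"
}

// echoCommand returns a shell line that prints "$ <command>" in bold green.
func echoCommand(command string) string {
	return "printf '%s\\n' " + shellQuote(ansiBoldGreen+"$ "+command+ansiReset)
}

func main() {
	// The generated line goes into the job script right before the command itself.
	fmt.Println(echoCommand("make test"))
	fmt.Println("make test")
}
```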

Runner captures standard output and error from the Executor, storing them in temporary files. Before job completion, Runner periodically uploads these logs to Gitlab using PATCH /api/v4/jobs/{job_id}/trace. The request headers look like:

Host: gitlab.example.com

User-Agent: gitlab-runner 15.2.0~beta.60.gf98d0f26 (main; go1.18.3; linux/amd64)

Content-Length: 314 # chunk length

Content-Range: 0-313 # chunk position

Content-Type: text/plain

Job-Token: jTruJD4xwEtAZo1hwtAp

Accept-Encoding: gzip

The log content goes in the HTTP body, e.g.:

\x1b[0KRunning with gitlab-runner 15.2.0~beta.60.gf98d0f26 (f98d0f26)\x1b[0;m

\x1b[0K on rockgiant-1 bKzi84Wi\x1b[0;m

section_start:1663398416:prepare_executor

\x1b[0K\x1b[0K\x1b[36;1mPreparing the "docker" executor\x1b[0;m\x1b[0;m

\x1b[0KUsing Docker executor with image ubuntu:bionic ...\x1b[0;m

\x1b[0KPulling docker image ubuntu:bionic ...\x1b[0;m

Gitlab, after ingesting the logs, responds with 202 Accepted, along with headers:

Job-Status: running # current job state recorded by the server

Range: 0-1899 # current range received

X-Gitlab-Trace-Update-Interval: 60 # proposed log uploading interval

Here is an interesting optimization: when a user is watching the CI log on the GitLab web page, X-Gitlab-Trace-Update-Interval is tuned to 3, meaning the runner should upload a log chunk every 3 seconds so that the user sees the job log streaming live.
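The incremental upload can be sketched in Go as below. The headers match the ones listed above; `sendTrace`, `fakeTrace`, and `newFakeTrace` are hypothetical names, and the fake server simply appends whatever it receives and always suggests a 3-second interval, so treat this as an illustration of the protocol rather than a faithful client.

```go
package main

import (
	"bytes"
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
	"strconv"
	"sync"
	"time"
)

// sendTrace uploads log[offset:] as one chunk. It returns the new offset and
// the upload interval suggested by the server (0 if none was suggested).
func sendTrace(baseURL string, jobID int, jobToken string, log []byte, offset int) (int, time.Duration, error) {
	chunk := log[offset:]
	if len(chunk) == 0 {
		return offset, 0, nil // nothing new to send
	}
	url := fmt.Sprintf("%s/api/v4/jobs/%d/trace", baseURL, jobID)
	req, err := http.NewRequest(http.MethodPatch, url, bytes.NewReader(chunk))
	if err != nil {
		return offset, 0, err
	}
	req.Header.Set("Content-Type", "text/plain")
	req.Header.Set("Content-Range", fmt.Sprintf("%d-%d", offset, offset+len(chunk)-1))
	req.Header.Set("Job-Token", jobToken)

	resp, err := http.DefaultClient.Do(req)
	if err != nil {
		return offset, 0, err
	}
	defer resp.Body.Close()
	if resp.StatusCode != http.StatusAccepted {
		return offset, 0, fmt.Errorf("unexpected status %s", resp.Status)
	}

	var interval time.Duration
	if secs, err := strconv.Atoi(resp.Header.Get("X-Gitlab-Trace-Update-Interval")); err == nil {
		interval = time.Duration(secs) * time.Second
	}
	return offset + len(chunk), interval, nil
}

// fakeTrace is a hypothetical server that appends every chunk it receives.
type fakeTrace struct {
	mu       sync.Mutex
	received []byte
}

func (f *fakeTrace) ServeHTTP(w http.ResponseWriter, r *http.Request) {
	chunk, _ := io.ReadAll(r.Body)
	f.mu.Lock()
	f.received = append(f.received, chunk...)
	total := len(f.received)
	f.mu.Unlock()

	w.Header().Set("Job-Status", "running")
	w.Header().Set("Range", fmt.Sprintf("0-%d", total))
	w.Header().Set("X-Gitlab-Trace-Update-Interval", "3") // pretend a user is watching
	w.WriteHeader(http.StatusAccepted)
}

func (f *fakeTrace) content() string {
	f.mu.Lock()
	defer f.mu.Unlock()
	return string(f.received)
}

func newFakeTrace() (*fakeTrace, *httptest.Server) {
	f := &fakeTrace{}
	return f, httptest.NewServer(f)
}

func main() {
	fake, srv := newFakeTrace()
	defer srv.Close()

	log := []byte("Pulling docker image ubuntu:bionic ...\n")
	offset, interval, err := sendTrace(srv.URL, 42, "job-token-example", log, 0)
	fmt.Println(offset, interval, err, fake.content() == string(log))
}
```

Tracking the offset on the Runner side means each call only sends the bytes produced since the previous upload.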

Reporting Execution Results

Upon script completion or failure, Runner:

- Uploads any remaining logs;

- Calls PUT /api/v4/jobs/{job_id} to update the job status with success or failure.

A successful job update might look like:


For a failed job:


Gitlab responds with 200 OK once it successfully receives the status update. If the server isn’t ready to accept the update (e.g., logs are still processing asynchronously), it returns 202 Accepted, indicating that Runner should retry later. The suggested retry interval arrives in the X-GitLab-Trace-Update-Interval response header and is generated with a scheme like exponential backoff.
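The final update and its retry loop might look like the following Go sketch. The body fields `token`, `state`, and `failure_reason` reflect my understanding of the endpoint and are assumptions here, as is the `script_failure` value; `updateJob` and `fakeGitlab` are hypothetical names, and the fake server suggests a 0-second interval so the example finishes instantly.

```go
package main

import (
	"bytes"
	"encoding/json"
	"errors"
	"fmt"
	"net/http"
	"net/http/httptest"
	"strconv"
	"sync"
	"time"
)

// jobUpdate is the assumed shape of the final status report.
type jobUpdate struct {
	Token         string `json:"token"`                    // per-job token
	State         string `json:"state"`                    // "success" or "failed"
	FailureReason string `json:"failure_reason,omitempty"` // e.g. "script_failure"
}

// updateJob reports the final state, retrying while the server answers 202.
// It returns the number of attempts made.
func updateJob(baseURL string, jobID int, upd jobUpdate, maxAttempts int) (int, error) {
	body, err := json.Marshal(upd)
	if err != nil {
		return 0, err
	}
	url := fmt.Sprintf("%s/api/v4/jobs/%d", baseURL, jobID)

	for attempt := 1; attempt <= maxAttempts; attempt++ {
		req, err := http.NewRequest(http.MethodPut, url, bytes.NewReader(body))
		if err != nil {
			return attempt, err
		}
		req.Header.Set("Content-Type", "application/json")
		resp, err := http.DefaultClient.Do(req)
		if err != nil {
			return attempt, err
		}
		resp.Body.Close()

		switch resp.StatusCode {
		case http.StatusOK:
			return attempt, nil
		case http.StatusAccepted:
			// Wait for the interval the server suggests; a missing or
			// malformed header parses as 0 and means "retry immediately".
			secs, _ := strconv.Atoi(resp.Header.Get("X-GitLab-Trace-Update-Interval"))
			time.Sleep(time.Duration(secs) * time.Second)
		default:
			return attempt, fmt.Errorf("unexpected status %s", resp.Status)
		}
	}
	return maxAttempts, errors.New("job update still pending after all attempts")
}

// fakeGitlab is a hypothetical server answering 202 for the first
// pendingResponses calls and 200 afterwards.
func fakeGitlab(pendingResponses int) *httptest.Server {
	var mu sync.Mutex
	calls := 0
	return httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		mu.Lock()
		calls++
		n := calls
		mu.Unlock()
		if n <= pendingResponses {
			w.Header().Set("X-GitLab-Trace-Update-Interval", "0")
			w.WriteHeader(http.StatusAccepted)
			return
		}
		w.WriteHeader(http.StatusOK)
	}))
}

func main() {
	srv := fakeGitlab(2)
	defer srv.Close()

	failed := jobUpdate{Token: "job-token-example", State: "failed", FailureReason: "script_failure"}
	attempts, err := updateJob(srv.URL, 42, failed, 5)
	fmt.Println(attempts, err)
}
```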

Diagram for the Process

sequenceDiagram

autonumber

Runner->>Gitlab: Request job (long poll)

Gitlab-->>Runner: Job details & credentials

Runner->>Runner: Prepare environment & clone repo

loop Execute job and upload logs

Runner->>Runner: Execute job

Runner->>Gitlab: Incrementally upload logs

end

Runner->>Gitlab: Report job completion

Runner->>Runner: Clean up and end task

Rolling Up Our Sleeves

With a comprehensive walkthrough of Gitlab Runner’s core duties, it’s time to dive into coding our very own Runner!

Naming Our Creation

I’m quite fond of egg tarts, so let’s nickname our DIY Runner “Tart”. And because every serious project needs a logo:

Armed with the blessings of the patron saint of computing, Ada Lovelace, and a logo that screams “this is definitely a serious project,” we’re all set.

Planning Features

Like Gitlab Runner, Tart is a CLI program with these main features:

- Register (register): Consumes a Gitlab registration token and spits out a TOML config to stdout. Redirecting output to a file circumvents the eternal dilemma of whether to overwrite existing configurations.

- Run a single job (single): Waits for, executes a job, reports the result, then exits. Tailored for debugging.

- Run continuously (run): Similar to single but loops indefinitely, executing jobs as they come.

With spf13/cobra, we can quickly scaffold the CLI:

$ tart

An educational purpose, unofficial Gitlab Runner.

Usage:

tart [command]

Available Commands:

completion Generate the autocompletion script for the specified shell

help Help about any command

register Register self to Gitlab and print TOML config into stdout

run Listen and run CI jobs

single Listen, wait and run a single CI job, then exit

version Print version and exit

Flags:

--config string Path to the config file (default "tart.toml")

-h, --help help for tart

Use "tart [command] --help" for more information about a command.

Building an Isolated Execution Environment

Crafting an isolated execution environment is arguably the most critical task for a Runner. Ideal traits include:

- Isolation: Jobs should not affect each other or the host machine.

- Reproducibility: Identical commits should yield identical CI results.

- Host Safety: Jobs cannot compromise the host or other jobs.

- Cache-friendliness: Leveraging space to save time.

Among Gitlab Runner’s Executors, each meets these criteria to varying degrees. For Tart, let’s opt for Firecracker to build our execution environment.

Firecracker is a lightweight VM manager developed by AWS, capable of launching secure, multi-tenant container and function-based services. It starts a VM in under a second with minimal overhead, using a stripped-down device model and KVM.

Launching a CI-ready MicroVM requires:

- Linux Kernel Image: The foundation of any VM.

- TAP Device: A virtual layer-2 network device for connecting the VM to the outside world.

- Root File System (rootFS): Similar to a Docker image, containing the OS and its filesystem.

For a detailed implementation, see Tart’s approach to rootFS. Notably, each job’s life cycle around the rootFS entails:

- Preparing the Environment: Clone a rootFS template and boot up the VM.

- Executing Scripts: Here, we’ll delve into specifics shortly.

- Cleaning Up: Shut down the VM and delete the rootFS copy.

Each VM operates on a copy of the rootFS, ensuring isolation and reproducibility.
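The copy-then-delete life cycle of the rootFS can be sketched in Go like this. It is a simplified illustration, not Tart's actual code: `prepareRootFS` and `cleanupRootFS` are hypothetical names, the copy is a plain byte-for-byte copy (a real implementation might prefer a copy-on-write clone for speed), and booting the VM is left out entirely.

```go
package main

import (
	"fmt"
	"io"
	"os"
)

// prepareRootFS copies the template image into a fresh per-job file
// and returns the path of that copy.
func prepareRootFS(template string) (string, error) {
	src, err := os.Open(template)
	if err != nil {
		return "", err
	}
	defer src.Close()

	dst, err := os.CreateTemp("", "rootfs-*.ext4")
	if err != nil {
		return "", err
	}
	if _, err := io.Copy(dst, src); err != nil {
		dst.Close()
		os.Remove(dst.Name())
		return "", err
	}
	if err := dst.Close(); err != nil {
		os.Remove(dst.Name())
		return "", err
	}
	return dst.Name(), nil
}

// cleanupRootFS deletes the per-job copy once the VM has shut down.
func cleanupRootFS(path string) error { return os.Remove(path) }

// writeTempFile and readFile are small helpers for the demo below.
func writeTempFile(content string) (string, error) {
	f, err := os.CreateTemp("", "template-*.ext4")
	if err != nil {
		return "", err
	}
	defer f.Close()
	_, err = f.WriteString(content)
	return f.Name(), err
}

func readFile(path string) (string, bool) {
	data, err := os.ReadFile(path)
	return string(data), err == nil
}

func main() {
	// A tiny text file stands in for a real rootFS image.
	template, err := writeTempFile("pristine image bytes")
	if err != nil {
		fmt.Println(err)
		return
	}
	defer os.Remove(template)

	jobCopy, err := prepareRootFS(template)
	if err != nil {
		fmt.Println(err)
		return
	}
	// A job scribbling on its copy leaves the template untouched.
	os.WriteFile(jobCopy, []byte("modified by job"), 0o600)
	content, _ := readFile(template)
	fmt.Println(content)
	fmt.Println(cleanupRootFS(jobCopy))
}
```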

Firecracker’s simplicity means we need an agent inside the VM to execute scripts and handle outputs. SSH fits the bill perfectly:

- Pre-install sshd and public keys in the rootFS.

- Upon VM startup, Tart connects via SSH to execute commands.

- Commands run through SSH, with outputs and exit codes relayed back to Tart.

Script Execution

Creating and executing scripts involves concatenating Gitlab’s script arrays and environment variables into a single, executable bash script. Preceded by set -euo pipefail for error handling, the script includes:

- Cloning the repository and cd into it.

- Setting environment variables with export.

- Using set -x to echo commands before execution.

- Executing user-defined scripts.

SSH forwards the script for execution, with outputs and exit codes managed by Tart and logs incrementally uploaded to Gitlab.
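Assembling that script is mostly string concatenation. The Go sketch below follows the order described above; `buildScript` and `variable` are hypothetical names, the clone lines are passed in ready-made, variable keys are assumed to be valid shell identifiers, and `set -x` is used as the bash option that traces each command before it runs.

```go
package main

import (
	"fmt"
	"strings"
)

// variable is one CI variable from the job payload.
type variable struct{ Key, Value string }

// shellQuote wraps s in single quotes so the shell treats it as one literal word.
func shellQuote(s string) string {
	return "'" + strings.ReplaceAll(s, "'", `'\''`) + "'"
}

// buildScript concatenates the clone step, the exported variables, and the
// user's commands into one bash script.
func buildScript(cloneLines []string, vars []variable, commands []string) string {
	var b strings.Builder
	b.WriteString("set -euo pipefail\n") // stop at the first failing command
	for _, line := range cloneLines {
		b.WriteString(line + "\n")
	}
	for _, v := range vars {
		// Keys are assumed to be valid identifiers; values are quoted.
		b.WriteString("export " + v.Key + "=" + shellQuote(v.Value) + "\n")
	}
	b.WriteString("set -x\n") // trace each user command before it runs
	for _, c := range commands {
		b.WriteString(c + "\n")
	}
	return b.String()
}

func main() {
	script := buildScript(
		[]string{"git clone 'https://gitlab.example.com/group/tart.git' tart", "cd tart"},
		[]variable{{Key: "CI", Value: "true"}},
		[]string{"go build ./...", "go test ./..."},
	)
	fmt.Print(script)
}
```

The resulting string is what gets piped to bash over the SSH session.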

Bootstrapping: Tart Running Its Own CI

To test our Runner, we can have it run its own CI jobs. Here’s a .gitlab-ci.yml for Tart to handle its CI:


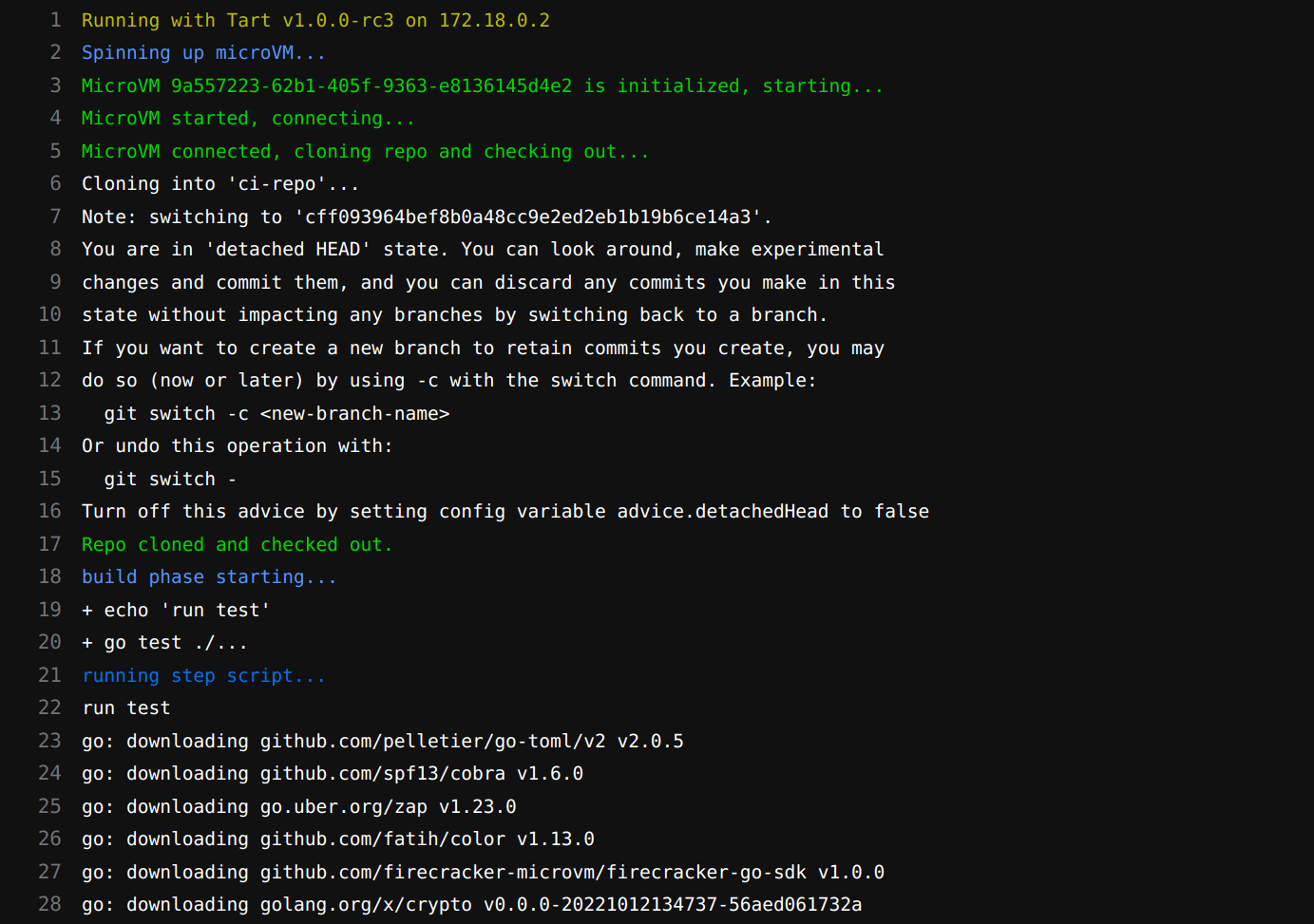

After registering Tart as a Runner for the repository and disabling shared runners to ensure the job runs on Tart, trigger a CI run. It looks like it’s working!

A Glimpse into History

In the early days (around 2014-2015), Gitlab Runner had numerous active third-party implementations. Among these, Kamil Trzciński’s Go-based GitLab CI Multi-purpose Runner caught Gitlab’s eye. This implementation replaced Gitlab’s own Ruby-based version to become what we know today as Gitlab Runner. At that time, Trzciński was working at Polidea, making the GitLab CI Multi-purpose Runner a notable community contribution. It’s a fantastic example of how open-source collaboration can lead to widely adopted solutions.